Potato chips recognizer with Google AutoML

I have two passions: potato chips and artificial intelligence. So why not combine them to show how easy it is to build your own AI models?

One way to do that is to build an AI that can accurately recognize different brands of potato chips, so that’s what I’m going to do here. I’ll show you how you can make your own AI models for your business or for fun, all without writing any code.

I built this AI with Google AutoML. That meant I only had to collect data and upload it to Google, which in turn trained the model and deployed it, ready to use. If you want a deeper discussion of AutoML, you can read my post here.

You might wonder how expensive or difficult it was. Actually, the total cost was around 40 EUR and about three hours of work.

The result was pretty amazing. Even though I almost ate the training data before getting started, I managed to build a model that accurately recognized the different kinds of chips.

Why is this even relevant to you?

This project might sound silly, but you shouldn’t underestimate the business potential of such easy access to custom AI. If your business has employees who need to identify spare parts on the go, spot mistakes on an assembly line, or solve other visual problems that come up frequently, you can save a lot of money with very little effort.

Besides visual problems like the one I’m tackling here, you can also build your own language models or make forecasts based on data in a database.

How I did it

Now for the fun stuff. I’ll go through the problem I’m trying to solve, how I got training data, how I trained the model and how I evaluated it.

The problem

The problem is simple. I chose four different kinds of potato chips that I wanted the AI to tell apart. To make the problem a little harder, I picked two that look very similar.

The images below show “snack chips original” and “snack chips sour cream and onion”, respectively. As you can see, they look very similar.

Getting training data

As I’m writing this, I’m devouring the remaining training data. I’m a little sick of potato chips now, but not ashamed at all. It’s a sacrifice I had to make in the name of AI.

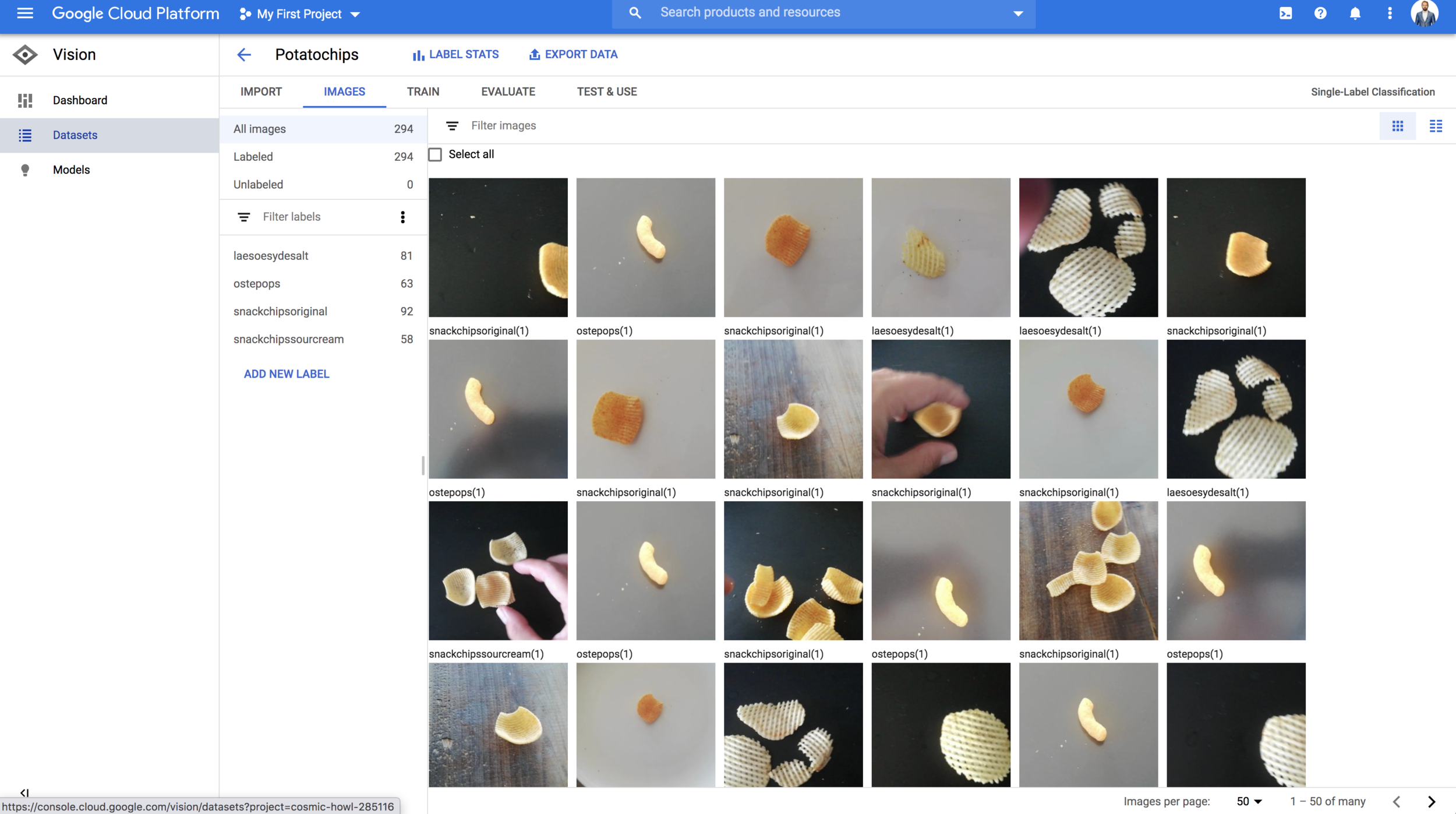

Google recommends around 100 different examples for each label. I was lazy, so I went a little below that: 289 images in total across 4 labels, or roughly 72 per label on average.
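If you keep your images in one folder per label, a few lines of Python can tell you how far each label is from Google’s guideline before you upload anything. This is a minimal sketch, assuming a hypothetical layout like `training_images/snack_chips_original/*.jpg`; the folder names, the accepted file extensions and the 100-image threshold (taken from the recommendation above) are assumptions you can adjust.

```python
from pathlib import Path

# Guideline quoted in this post: around 100 examples per label.
RECOMMENDED_PER_LABEL = 100
# Assumed image formats; adjust to whatever your camera or frame extractor produces.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images_per_label(root: Path) -> dict[str, int]:
    """Count image files in each subfolder of root (one subfolder per label)."""
    counts = {}
    for label_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        counts[label_dir.name] = sum(
            1
            for f in label_dir.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
        )
    return counts


def labels_below_recommendation(
    counts: dict[str, int], minimum: int = RECOMMENDED_PER_LABEL
) -> list[str]:
    """Return the labels that have fewer than `minimum` images."""
    return [label for label, n in counts.items() if n < minimum]
```

Calling `labels_below_recommendation(count_images_per_label(Path("training_images")))` would list the labels that still need more pictures.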

Taking 289 photos would take a while, so I cheated a little, and I’d recommend the same trick if you try something similar: instead of taking pictures, I shot video with my smartphone and extracted frames from it.

I used VLC media player to extract the frames. VLC has a scene filter among its video filters that saves frames from a video as images, but there is plenty of other free software that can do the same.
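VLC’s scene video filter has a recording ratio setting (`--scene-ratio` on the command line), where a value of 3 keeps one frame out of every three. Picking that ratio is simple arithmetic, sketched below in Python. The frame rate, clip length and target count are assumptions, and VLC may start counting from a slightly different frame than this model does, so treat the result as an estimate.

```python
import math


def frame_ratio(fps: float, duration_s: float, target_frames: int) -> int:
    """Largest 'keep one frame in N' ratio that still yields at least target_frames."""
    if target_frames <= 0:
        raise ValueError("target_frames must be positive")
    total_frames = math.floor(fps * duration_s)
    # total // target <= total / target, so total / ratio >= target.
    # If the clip is shorter than the target, keep every frame (ratio 1).
    return max(1, total_frames // target_frames)


def frames_kept(fps: float, duration_s: float, ratio: int) -> int:
    """Number of frames kept when saving frames 0, ratio, 2*ratio, ..."""
    total_frames = math.floor(fps * duration_s)
    return len(range(0, total_frames, ratio))
```

For example, a hypothetical 30-second clip at 30 fps has 900 frames. Aiming for about 72 images per label gives `frame_ratio(30, 30, 72) == 12`, which keeps 75 frames.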

With the training images ready, I logged in to Google Cloud and enabled the AutoML Vision API.

Next, I created a new dataset. I chose single-label classification, since it’s the simplest option for this use case. If you need to identify several different objects in an image, or even get a bounding box for each object’s position, that’s possible too.

With the dataset created, I just bulk uploaded the potato chip images through the UI and labeled them.
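I used the UI, but AutoML Vision can also import a dataset from a CSV file stored in Cloud Storage, where each row holds an image’s `gs://` path followed by its label. An optional first column can assign a row to TRAIN, VALIDATION or TEST; if you leave it out, AutoML splits the data for you. Below is a minimal sketch that builds such a file, assuming the images were copied to a hypothetical bucket path with one folder per label. The bucket name and label names are placeholders, so check the current import documentation before relying on the exact format.

```python
import csv
import io


def build_import_rows(files_by_label: dict[str, list[str]], gcs_prefix: str) -> list[list[str]]:
    """One [gs:// URI, label] row per image, assuming gcs_prefix/<label>/<file> layout."""
    rows = []
    for label in sorted(files_by_label):
        for name in sorted(files_by_label[label]):
            uri = f"{gcs_prefix.rstrip('/')}/{label}/{name}"
            rows.append([uri, label])  # no set column: let AutoML split the data
    return rows


def rows_to_csv(rows: list[list[str]]) -> str:
    """Serialize rows as CSV text with Unix line endings."""
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerows(rows)
    return buf.getvalue()
```

You would then upload the CSV text to the same bucket and point the dataset import at it.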

Easy, right? The dataset is now ready.

Training

With the data in place, I trained the model. I clicked “Train new model” and was presented with a few options. First, I chose “Cloud hosted”, which means Google hosts the model. The alternative, “Edge”, is for deploying on devices such as a Raspberry Pi.

Next, I chose the recommended node-hour budget. One node hour costs about 3 USD. I also selected “deploy model” so the model would be ready to use as soon as training finished.
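The node-hour pricing makes the training cost easy to estimate up front. Here is a minimal sketch, assuming the roughly 3 USD per node hour mentioned above (check the current price list, since prices change) and a budget you pick yourself:

```python
# Approximate price quoted in this post; check Google's current pricing page.
USD_PER_NODE_HOUR = 3.0


def training_cost_usd(node_hours: float, usd_per_node_hour: float = USD_PER_NODE_HOUR) -> float:
    """Estimated cost of a training run with the given node-hour budget."""
    return node_hours * usd_per_node_hour


def node_hours_for_budget(budget_usd: float, usd_per_node_hour: float = USD_PER_NODE_HOUR) -> float:
    """How many node hours a given amount of money buys."""
    return budget_usd / usd_per_node_hour
```

`training_cost_usd(8)` gives 24.0 and `node_hours_for_budget(30)` gives 10.0, so roughly ten node hours fit in a 30 USD budget. The 8-hour figure is only an example, not the budget AutoML recommended to me.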

Once you click start training, the model starts training, which in my experience takes 2-3 hours. Google will send you an email when it’s done, so you don’t have to sit there waiting.

The costs

I wrote in the introduction that this project cost about 40 EUR and three hours of work. To give you a better picture, here are the costs in a bit more detail:

Potato chips: 10 EUR.

Training the model: 30 EUR.

There are also costs for storing data, calling the Google Cloud API when using the model, and hosting the model. I haven’t included these, since they vary a lot depending on the use case. That said, they’re usually not significant.

As a little bonus, I actually didn’t spend any real money on this project. When you sign up for Google Cloud, they give you a 300 USD voucher to spend on their services.

Results

Alright. Now for the results. The model actually did pretty well. I expected it to have a lot of trouble with the similar-looking kinds of chips, but it usually guessed the right one with more than 80% confidence. Pretty nice for a few hours of work.

I tested the confidence directly in the cloud UI as a sanity check.

When the model is ready you also get some analytics about the model.

The confusion matrix, recall, and precision are really useful metrics to look at when analyzing your model. If you want to use AI in your business, you should definitely spend some time on this. I wrote a post about this subject here.
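Precision and recall both fall out of the confusion matrix. For a given label, precision is the share of images predicted as that label that really were it (read down the column), and recall is the share of images of that label the model caught (read along the row). Here is a minimal Python sketch, assuming a matrix of raw counts where rows are true labels and columns are predicted labels. The numbers in the example are made up for illustration and are not from my model.

```python
def per_label_metrics(
    confusion: list[list[int]], labels: list[str]
) -> dict[str, tuple[float, float]]:
    """Return {label: (precision, recall)}.

    confusion[i][j] is the number of test images whose true label is labels[i]
    and that the model predicted as labels[j].
    """
    n = len(labels)
    metrics = {}
    for k, label in enumerate(labels):
        true_positives = confusion[k][k]
        predicted_as_k = sum(confusion[i][k] for i in range(n))  # column sum
        actually_k = sum(confusion[k])  # row sum
        precision = true_positives / predicted_as_k if predicted_as_k else 0.0
        recall = true_positives / actually_k if actually_k else 0.0
        metrics[label] = (precision, recall)
    return metrics
```

With the made-up matrix `[[8, 2], [1, 9]]` for `["snack_chips_original", "snack_chips_sour_cream_onion"]`, the first label gets precision 8/9 (about 0.89) and recall 8/10 = 0.8.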

Connecting to other services

Since Google deploys the model and exposes an API for it, you can integrate it into existing web services, mobile apps, or other systems. This is really simple, and the integration shouldn’t take a developer long.

Final notes

I did this to show how accessible AI really is, and I hope more businesses will invest in this technology now that the barriers are so low.

That said, I’m a big advocate of the idea that the technical problem in fields like AI is usually the easiest part. Everything around the AI, such as the people and processes, is usually where the problems start showing. So before you jump right into building AI, spend some time on how it will affect the employees using it and which processes you might want to change. That is usually a very rewarding exercise, no matter the technology.

Have fun building and let me know if you have any fun project ideas I should try out.